Various ways to implement a daemon that pings remote hosts.

python_pinger

These are different approaches to how one might implement a daemon to repeatedly ping multiple hosts to see if the network is up.

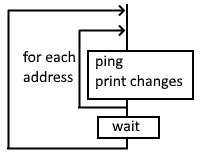

Single Threaded

This is the most naive implementation. The main thread pings each host in turn, one at a time, and prints any status changes.

It's so simple that I'm not going to provide a sample source file, but for the others below, I will.
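For illustration only, here is a minimal sketch of that single-threaded loop. It is not code from this repository. The names `ping`, `check_all`, and `main` are hypothetical, and `ping` assumes a Linux iputils `ping` binary where `-c 1` sends one packet and `-W 1` waits up to one second for a reply. The ping function is passed in as a parameter so the status-tracking logic can be exercised without a network.

```python
import subprocess
import time
from typing import Callable, Dict, Iterable, List, Tuple


def ping(host: str) -> bool:
    """Send one echo request (assumes Linux iputils `ping`: -c count, -W timeout in seconds)."""
    result = subprocess.run(["ping", "-c", "1", "-W", "1", host],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def check_all(hosts: Iterable[str],
              last_status: Dict[str, bool],
              ping_fn: Callable[[str], bool] = ping) -> List[Tuple[str, bool]]:
    """Ping each host in turn on the calling thread; return (host, is_up) for every change."""
    changes = []
    for host in hosts:
        is_up = ping_fn(host)  # blocks until this host answers or times out
        # The first check reports every host, since nothing is known about it yet.
        if last_status.get(host) != is_up:
            last_status[host] = is_up
            changes.append((host, is_up))
    return changes


def main(hosts: List[str], interval: float = 5.0) -> None:
    """Run forever: one serialized round of pings, print changes, then sleep."""
    last_status: Dict[str, bool] = {}
    while True:
        for host, is_up in check_all(hosts, last_status):
            print(f"{host} is {'up' if is_up else 'DOWN'}")
        time.sleep(interval)
```

Calling `main(["192.168.1.1", "8.8.8.8"])` (placeholder addresses) would run the loop. Because the pings happen one after another, a round with N unreachable hosts can take up to about N seconds with a one-second timeout, which is the serialization problem described in the downsides.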

Upsides

Really simple code.

Downsides

Since the pings are serialized, one long timeout from one host can delay detecting a problem at another host.

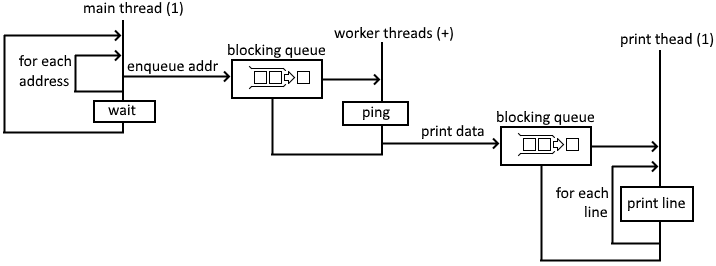

Long Lived Workers Consuming from Queue

Raymond Hettinger's PyBay 2018 keynote uses the queue module to pass data between threads, so I thought I'd make a version of the pinger that does the same.

The main thread sends an address to a queue that the worker threads wait upon.

See the source: long_lived_worker_queue.py
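As a rough sketch of the same idea (not the contents of long_lived_worker_queue.py), the main thread only puts addresses on a `queue.Queue`, and a fixed pool of long-lived worker threads block on `get()`. The `None` shutdown sentinel, the `rounds` count that makes the sketch terminate, and the names `worker` and `run_rounds` are all hypothetical; the `ping` helper assumes Linux iputils flags.

```python
import queue
import subprocess
import threading
import time
from typing import Callable, Dict, List


def ping(host: str) -> bool:
    """Send one echo request (assumes Linux iputils `ping`: -c count, -W timeout in seconds)."""
    result = subprocess.run(["ping", "-c", "1", "-W", "1", host],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def print_change(host: str, is_up: bool) -> None:
    print(f"{host} is {'up' if is_up else 'DOWN'}")


def worker(tasks: queue.Queue, last_status: Dict[str, bool],
           ping_fn: Callable[[str], bool],
           report: Callable[[str, bool], None]) -> None:
    """Long-lived worker: block on the queue and ping each address it receives."""
    while True:
        host = tasks.get()
        if host is None:  # hypothetical shutdown sentinel
            tasks.task_done()
            return
        is_up = ping_fn(host)
        # Read, compare, and update a dict that every worker shares.
        if last_status.get(host) != is_up:
            last_status[host] = is_up
            report(host, is_up)
        tasks.task_done()


def run_rounds(hosts: List[str], rounds: int, interval: float = 5.0,
               ping_fn: Callable[[str], bool] = ping,
               report: Callable[[str, bool], None] = print_change,
               num_workers: int = 4) -> Dict[str, bool]:
    tasks: queue.Queue = queue.Queue()
    last_status: Dict[str, bool] = {}
    threads = [threading.Thread(target=worker,
                                args=(tasks, last_status, ping_fn, report))
               for _ in range(num_workers)]
    for t in threads:
        t.start()
    for _ in range(rounds):
        for host in hosts:
            tasks.put(host)  # the main thread only hands out addresses
        tasks.join()         # wait until every address in this round is handled
        time.sleep(interval)
    for _ in threads:
        tasks.put(None)      # one sentinel per worker
    for t in threads:
        t.join()
    return last_status
```

Waiting on `tasks.join()` between rounds is a design choice of this sketch: it keeps the same address from being queued twice while a slow ping is still in flight.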

Upsides

Each ping runs on its own worker thread, so one slow host doesn't block the others.

Downsides

The ping tasks read from and write to a shared dictionary (reading and updating each host's last status), so access to it has to be coordinated to avoid race conditions.

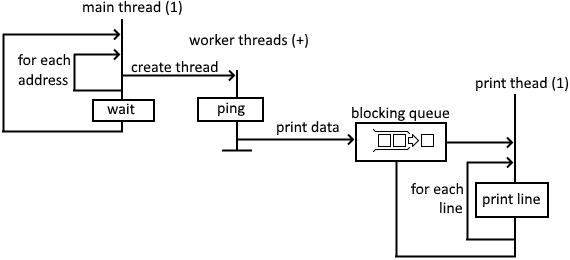

Short Lived Workers

How about we don't keep the workers around, and only spawn them when there's something to do? That way, we won't waste memory when there's nothing going on. The main thread passes the address to the workers when they're constructed.

See the source: short_lived_workers.py
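A minimal sketch of the short-lived variant, again not the repository's file: each round constructs one thread per host with the address passed in `args`, and the thread exits after a single ping. The names `check_host`, `run_round`, and `main` are hypothetical, as is the assumption that a round waits for all of its workers before sleeping. The `ping` helper assumes Linux iputils flags.

```python
import subprocess
import threading
import time
from typing import Callable, Dict, List


def ping(host: str) -> bool:
    """Send one echo request (assumes Linux iputils `ping`: -c count, -W timeout in seconds)."""
    result = subprocess.run(["ping", "-c", "1", "-W", "1", host],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def print_change(host: str, is_up: bool) -> None:
    print(f"{host} is {'up' if is_up else 'DOWN'}")


def check_host(host: str, last_status: Dict[str, bool],
               ping_fn: Callable[[str], bool],
               report: Callable[[str, bool], None]) -> None:
    """Body of one short-lived worker: ping a single address, then exit."""
    is_up = ping_fn(host)
    # Still a read-then-update on a dict shared by all workers.
    if last_status.get(host) != is_up:
        last_status[host] = is_up
        report(host, is_up)


def run_round(hosts: List[str], last_status: Dict[str, bool],
              ping_fn: Callable[[str], bool] = ping,
              report: Callable[[str, bool], None] = print_change) -> None:
    # The address is handed to each thread when it is constructed.
    workers = [threading.Thread(target=check_host,
                                args=(host, last_status, ping_fn, report))
               for host in hosts]
    for w in workers:
        w.start()
    for w in workers:
        w.join()  # every worker has exited by the time the round ends


def main(hosts: List[str], interval: float = 5.0) -> None:
    last_status: Dict[str, bool] = {}
    while True:
        run_round(hosts, last_status)
        time.sleep(interval)  # no worker threads exist while sleeping
```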

Upsides

The worker tasks aren't in memory when they're not doing anything, so the memory footprint is usually smaller.

Downsides

The ping tasks still read from and write to a shared dictionary (reading and updating each host's last status).

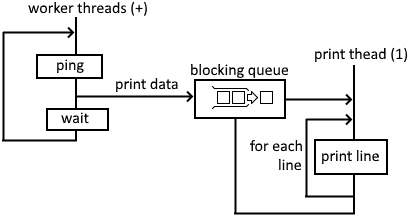

Long Lived Looping Workers

I saved the best for last. The only thing the main thread does is bring the workers and the print manager to life. The workers each independently do their own loop: ping, compare, print, and wait.

See the source: long_lived_looping_workers.py
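Here is a sketch of the looping design under a few assumptions, not a copy of long_lived_looping_workers.py. The README doesn't describe the print manager's internals; here it is assumed to be the only thread that prints, receiving lines through a `queue.Queue`, which fits the later remark that this version used only the synchronized queue class. The `stop` event, the `None` sentinel, the `output` parameter, and the names `looping_worker`, `print_manager`, and `start` are hypothetical additions so the sketch can be shut down and tested. The `ping` helper assumes Linux iputils flags.

```python
import queue
import subprocess
import threading
from typing import Callable, List


def ping(host: str) -> bool:
    """Send one echo request (assumes Linux iputils `ping`: -c count, -W timeout in seconds)."""
    result = subprocess.run(["ping", "-c", "1", "-W", "1", host],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def print_manager(messages: queue.Queue,
                  output: Callable[[str], None] = print) -> None:
    """The only thread that prints, so lines from different workers never interleave."""
    while True:
        msg = messages.get()
        if msg is None:  # hypothetical shutdown sentinel
            return
        output(msg)


def looping_worker(host: str, messages: queue.Queue, stop: threading.Event,
                   ping_fn: Callable[[str], bool] = ping,
                   interval: float = 5.0) -> None:
    last_status = None  # local to this thread: nothing is shared with other workers
    while not stop.is_set():
        is_up = ping_fn(host)                 # ping
        if is_up != last_status:              # compare
            last_status = is_up
            messages.put(f"{host} is {'up' if is_up else 'DOWN'}")  # print (via manager)
        stop.wait(interval)                   # wait, waking early if asked to stop


def start(hosts: List[str], ping_fn: Callable[[str], bool] = ping,
          interval: float = 5.0,
          output: Callable[[str], None] = print) -> Callable[[], None]:
    """Bring the print manager and one worker per host to life; return a shutdown function."""
    messages: queue.Queue = queue.Queue()
    stop = threading.Event()
    printer = threading.Thread(target=print_manager, args=(messages, output))
    workers = [threading.Thread(target=looping_worker,
                                args=(host, messages, stop, ping_fn, interval))
               for host in hosts]
    printer.start()
    for w in workers:
        w.start()

    def shutdown() -> None:
        stop.set()
        for w in workers:
            w.join()
        messages.put(None)  # queued after all worker messages, so the printer drains them first
        printer.join()

    return shutdown
```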

Upsides

No more race conditions! Each worker thread keeps track of its own host's last status and minds its own business.

Downsides

The worker threads remain in memory.

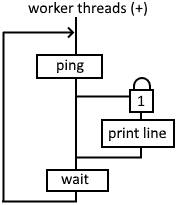

Long Lived Looping Locked Workers

That was fun, using only the synchronized queue class and no locks. But now that the long-lived looping workers don't need a task queue, let's replace the print manager with a threading lock.

See the source: long_lived_looping_locked_workers.py
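A sketch of the locked variant, not the contents of long_lived_looping_locked_workers.py: the print-manager thread and its queue are replaced by a module-level `threading.Lock` that each worker holds only while printing. The `stop` event, the `output` parameter, and the names `print_lock`, `looping_worker`, and `start` are hypothetical; the `ping` helper assumes Linux iputils flags.

```python
import subprocess
import threading
from typing import Callable, List

print_lock = threading.Lock()  # replaces the print-manager thread


def ping(host: str) -> bool:
    """Send one echo request (assumes Linux iputils `ping`: -c count, -W timeout in seconds)."""
    result = subprocess.run(["ping", "-c", "1", "-W", "1", host],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def looping_worker(host: str, stop: threading.Event,
                   ping_fn: Callable[[str], bool] = ping,
                   interval: float = 5.0,
                   output: Callable[[str], None] = print) -> None:
    last_status = None  # private to this worker
    while not stop.is_set():
        is_up = ping_fn(host)
        if is_up != last_status:
            last_status = is_up
            # Hold the lock only while printing, so lines from different workers don't interleave.
            with print_lock:
                output(f"{host} is {'up' if is_up else 'DOWN'}")
        stop.wait(interval)  # wait, waking early if asked to stop


def start(hosts: List[str], ping_fn: Callable[[str], bool] = ping,
          interval: float = 5.0,
          output: Callable[[str], None] = print) -> Callable[[], None]:
    """Start one looping worker per host; no printer thread is needed."""
    stop = threading.Event()
    workers = [threading.Thread(target=looping_worker,
                                args=(host, stop, ping_fn, interval, output))
               for host in hosts]
    for w in workers:
        w.start()

    def shutdown() -> None:
        stop.set()
        for w in workers:
            w.join()

    return shutdown
```

With a single lock held only around the print, there is no lock ordering to worry about; the difficulty mentioned in the downsides appears once code needs to hold several locks at the same time.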

Upsides

Gets rid of the print manager thread entirely.

Downsides

Uses a lock. In more complex applications with multiple locks, that quickly becomes difficult to reason about.

Is it any good?

Yes.

License

This software uses the MIT license.